By Adam Gardner, Head of Products

Artificial Intelligence (AI) is seemingly everywhere, and it’s here to stay. This is causing a rethink of just what an AI data centre looks like. What we do know is that large language models, chatbots, machine learning applications and neural networks run on high-density computers with heightened requirements for power, cooling, security and interconnection.

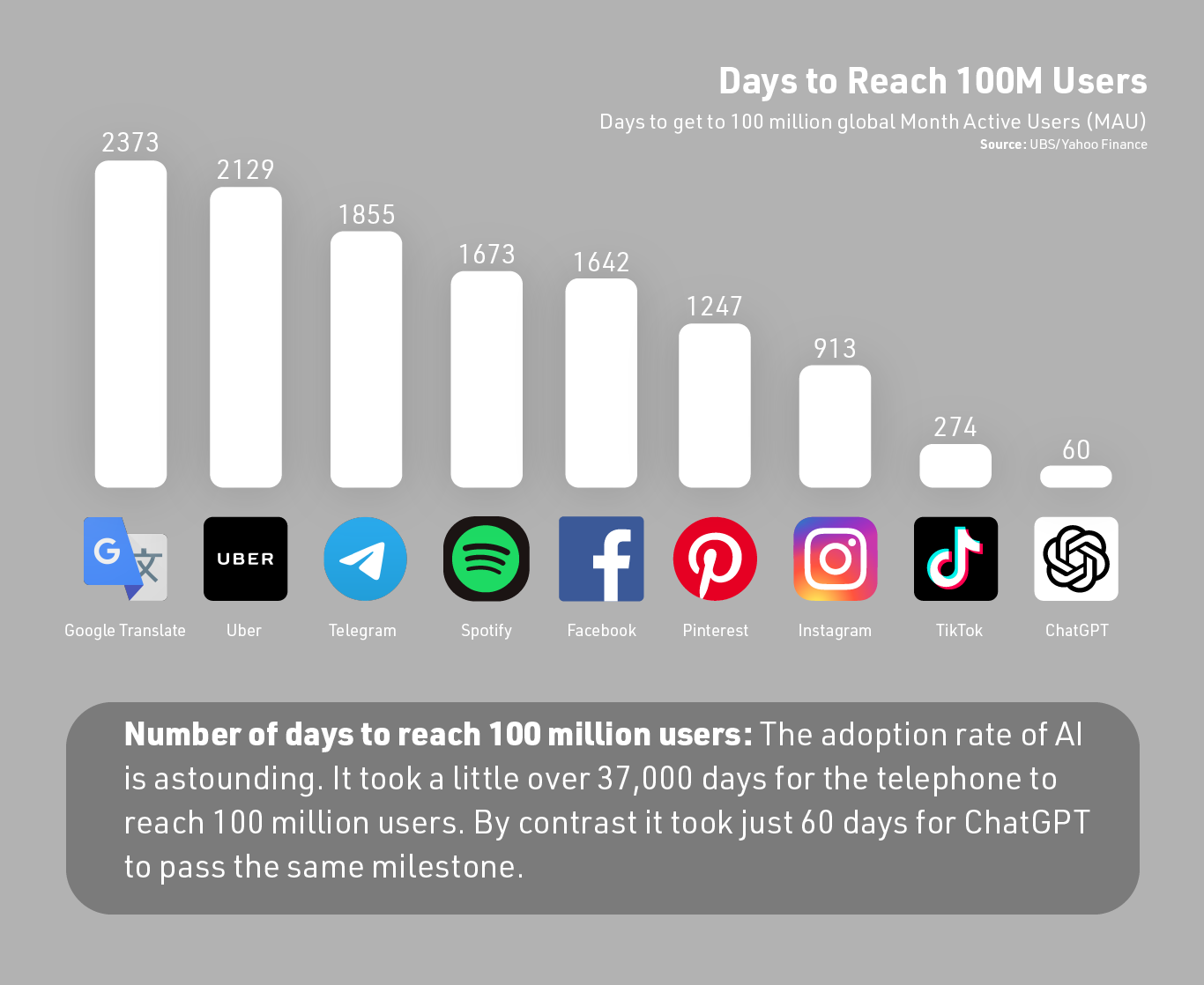

Most of us have heard of ChatGPT. Indeed, it would appear most of us have already used the remarkable new AI tool from OpenAI. It garnered a million users within five days of launching in November 2022 and boasted over 100 million users by January 2023.

Google and Facebook owners, Alphabet and Meta respectively, are also making big plays in the generative AI space.

Riding this innovation wave, many organisations are now also investing in this deep learning digital megatrend. According to IDC, global AI spending is expected to double to more than US$300B annually by 2026.

Just about every industry is seeing exciting applications of artificial intelligence technology in real-time analysis of large data sets to inform operational decisions. Whether in the resources sector, retail, agriculture, healthcare or financial services, there is a rush to capitalise on the enormous opportunity to achieve business objectives. Doing so, however, requires a specialist AI data centre.

Nothing artificial about AI infrastructure challenges

No matter where you are in your AI journey, it’s important to recognise that leveraging real-time data analysis requires a rethink of data centre and interconnection strategy, and it pays to address that up-front.

AI applications require high-density (HD) compute and low-latency interconnection, and are often associated with an edge strategy to get data and infrastructure closer to users. This all puts additional pressure on data centre specifications.

AI is fuelled by data – and lots of it – so those HD servers will require more power delivered to each rack and more cooling capability to manage additional heat generated. You’ll also need to ensure infrastructure is scalable, flexible and sustainable, in order to move in lockstep with growth and sustainability strategies.

It’s clear the vast majority of private data centres are not designed for the task of handling today’s high-density workloads – let alone tomorrow’s.

Five essential AI data centre considerations

Not all data centres are created equal. Consider each of the following before deciding where and how you are going to host critical digital infrastructure supporting artificial intelligence.

Data centre location

Location is everything when it comes to architecting AI infrastructure. Proximity to the data source and to its users is a key consideration. In general, AI is dependent on low latency, so it pays to minimise the distance, and the number of network hops, between where data is captured, stored, processed and utilised. Access to the digital services ecosystem supporting your AI objectives also matters (see Ecosystem accessibility below).
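To make the link between distance, network hops and latency concrete, here is a minimal sketch in Python. It assumes light travels through optical fibre at roughly 200 km per millisecond, which is a common rule of thumb. The per-hop forwarding delay and the example distances are purely illustrative assumptions, not measurements from any real network.

```python
# Rough round-trip latency estimate. All figures are illustrative assumptions.
FIBRE_KM_PER_MS = 200.0  # rule of thumb: light in fibre covers about 200 km per millisecond


def round_trip_ms(distance_km: float, hops: int, per_hop_ms: float = 0.05) -> float:
    """Estimate round-trip time from fibre distance and the number of network hops.

    per_hop_ms is a hypothetical forwarding delay per hop, per direction.
    """
    propagation = 2 * distance_km / FIBRE_KM_PER_MS  # out and back
    forwarding = 2 * hops * per_hop_ms               # each hop is crossed twice
    return propagation + forwarding


if __name__ == "__main__":
    # Hypothetical scenarios: a nearby facility versus a distant one.
    for label, km, hops in [("same metro", 50, 3), ("interstate", 1000, 8)]:
        print(f"{label}: {round_trip_ms(km, hops):.2f} ms")
```

Real-world latency also depends on routing, congestion and application overhead, so treat the result as a lower bound when comparing candidate locations.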

The greater consideration is the strength and geo-diversity of the overall data centre platform and network. When you colocate your infrastructure in an established network of data centres, you are only ever one interconnection away from the clouds, carriers and services required to run your applications.

Power and cooling

Infrastructure resilience is critical. As discussed, artificial intelligence requires highly dense computing to process large volumes of data in real time. Faster processing uses more power and generates more heat.
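As a rough illustration of why denser racks put more pressure on cooling, the sketch below converts IT load into heat output. It relies on two standard conversions: 1 kW of electrical load is about 3,412 BTU/hr of heat, and one ton of refrigeration removes 12,000 BTU/hr. The rack densities and rack count are hypothetical, and the calculation assumes essentially all power drawn by the servers ends up as heat.

```python
BTU_PER_HR_PER_KW = 3412.14    # 1 kW of electrical load is about 3,412 BTU/hr of heat
BTU_PER_HR_PER_TON = 12000.0   # 1 ton of refrigeration removes 12,000 BTU/hr


def cooling_load(rack_kw: float, racks: int) -> dict:
    """Estimate the heat that must be removed for a group of identical racks.

    Assumes all electrical power drawn by the IT equipment is converted to heat.
    """
    total_kw = rack_kw * racks
    heat_btu_hr = total_kw * BTU_PER_HR_PER_KW
    return {
        "it_load_kw": total_kw,
        "heat_btu_hr": heat_btu_hr,
        "cooling_tons": heat_btu_hr / BTU_PER_HR_PER_TON,
    }


if __name__ == "__main__":
    # Hypothetical comparison: a conventional rack density versus a high-density one.
    for rack_kw in (8, 40):
        load = cooling_load(rack_kw, racks=10)
        print(f"{rack_kw} kW/rack x 10 racks: {load['cooling_tons']:.1f} tons of cooling")
```

The relationship is linear: five times the power per rack means five times the heat to remove from the same floor space.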

As you progress on your AI journey, uptime objectives will revolve around the ability to keep HD servers continuously powered and cooled. This has major implications for data centre selection. You must have 100% confidence your current set-up has complete fault tolerance around power and cooling.

Security and sovereignty

As AI becomes more integral to your business, the consequences of security breaches (particularly those involving sensitive consumer data) become increasingly severe, ranging from regulatory sanctions and reputational damage right through to “game over” in a worst-case scenario.

It’s essential your data centre partner has a holistic view of security risk management, encompassing not just cybersecurity but also physical, supply chain and personnel security – also known as an “All Hazards” approach.

Another key consideration is data sovereignty, or where your data lives. Increasingly, Australian consumers expect their data to be kept on Australian shores, where it is subject to local laws and privacy regimes. Your data centre should be part of a broad sovereign ecosystem residing within a geo-diverse, national infrastructure platform.

Ecosystem accessibility

You’re going to be moving a lot of data around to capture the full value of AI, and you’re going to want access to the full range of cloud platform providers, carriers and digital service providers to extract value from that data.

The most secure, cost-effective and high-performance way of doing that is not to build the ecosystem yourself, but to embed yourself into an established interconnected ecosystem.

Sustainability and cost

With power and cooling requirements ballooning and the cost of power rising, AI could easily prove to be a major burden on your organisation’s carbon reduction targets and power bill. So, it pays to partner strategically with data centres that set industry benchmarks for energy efficiency performance.

Data centres are power intensive by nature, and AI takes it to the next level. So, everything you can do to limit power consumption will ultimately reduce the impact you have on future generations and improve your bottom line. Make sure you talk to your partner about any work they are doing to improve energy efficiency and deliver carbon-neutral data centre services with the help of Commonwealth-accredited carbon offset programs.
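One way to compare facilities on energy efficiency is Power Usage Effectiveness (PUE), a widely used industry metric defined as total facility energy divided by IT equipment energy. The article does not name a specific metric, so treating PUE as the benchmark is an assumption here. The sketch below shows how PUE flows through to annual energy use and cost. The IT load, PUE values and electricity price are all hypothetical.

```python
HOURS_PER_YEAR = 8760  # 24 hours x 365 days


def annual_energy_kwh(it_load_kw: float, pue: float) -> float:
    """Total facility energy per year for a constant IT load at a given PUE."""
    return it_load_kw * pue * HOURS_PER_YEAR


def annual_cost(it_load_kw: float, pue: float, price_per_kwh: float) -> float:
    """Annual energy cost, using a hypothetical flat electricity price."""
    return annual_energy_kwh(it_load_kw, pue) * price_per_kwh


if __name__ == "__main__":
    it_load_kw = 100   # hypothetical constant IT load
    price = 0.25       # hypothetical price per kWh
    for pue in (2.0, 1.3):
        cost = annual_cost(it_load_kw, pue, price)
        print(f"PUE {pue}: {annual_energy_kwh(it_load_kw, pue):,.0f} kWh, cost {cost:,.0f}")
```

Because the IT load is the same in both cases, the difference between the two results is entirely overhead such as cooling and power distribution, which is where an efficient facility saves both energy and money.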

Thinking AI? Think infrastructure first

If you are thinking about how AI and other emerging technologies might apply to your organisation over the next few years, now is the time to make critical decisions about the digital infrastructure that will support it.

Reach out to NEXTDC if you would like to find out more about planning tomorrow’s critical digital infrastructure.