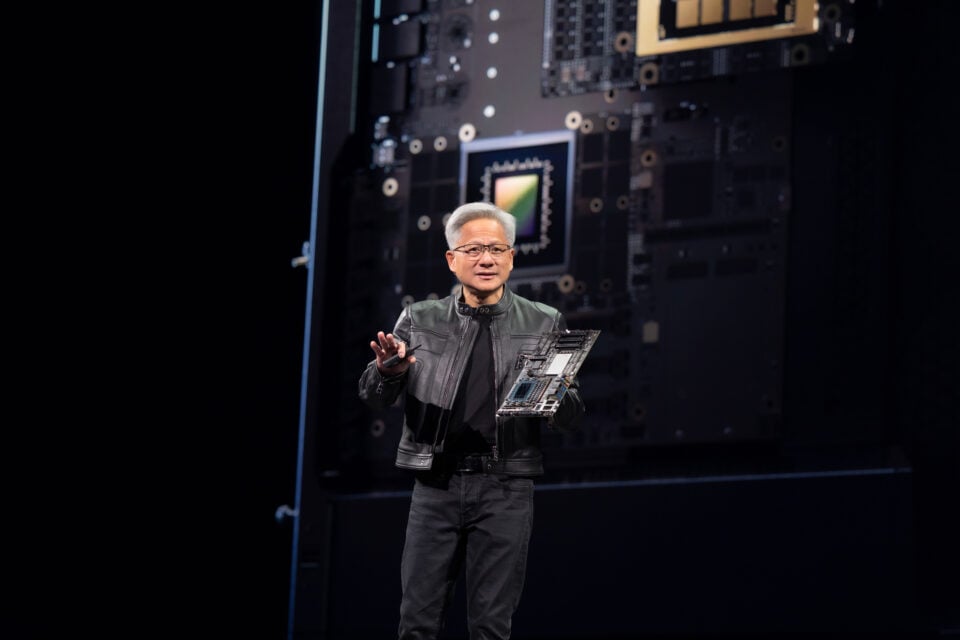

Jensen Huang’s Vision for the Future of Infrastructure, Intelligence, and Innovation

June 11–14, Paris | NVIDIA at VivaTech 2025

At this year’s VivaTech conference, NVIDIA founder and CEO Jensen Huang delivered a powerful message: infrastructure is now the innovation curve. As the AI era enters a new phase defined by reasoning, robotics, and relentless scale, the architecture of intelligence is being reimagined from the ground up.

According to Huang, the traditional data centre has evolved. What used to store and serve data is now tasked with generating tokens—the new raw material of intelligence. These facilities aren’t just housing compute—they’re AI factories, and they’re poised to become the economic engines of the digital age.

From the debut of Grace Blackwell—NVIDIA’s most powerful AI system—to breakthroughs in quantum computing, digital twins, and humanoid robotics, VivaTech 2025 made one thing clear: infrastructure is no longer a cost centre. It’s the product line.

Here’s what that means—and how NEXTDC is already building for it:

From Data Centres to AI Factories

“These aren’t data centres. These are factories that manufacture intelligence.”

— Jensen Huang, NVIDIA

NVIDIA’s vision for AI infrastructure is rooted in a fundamental shift: from serving to thinking. The modern AI factory doesn’t simply run workloads—it generates, simulates, reasons, and adapts continuously. This calls for a radical redefinition of infrastructure design and operations.

NEXTDC’s AI Ready data centre ecosystem is engineered for this future:

- 600kW+ rack capacity to support parallel, high-context GPU clusters

- Liquid and immersion cooling systems for thermal control at scale

- Tier IV-grade operational resilience for enterprise-grade uptime

- Digital twin-enabled environments for predictive orchestration and continuous optimisation

These capabilities form the operational substrate for large-scale inference, simulation-driven R&D, and reasoning workloads. This is how modern AI factories are built, and it’s how NEXTDC enables organisations to move from pilot to production with confidence and velocity.

Agentic AI: The Rise of Digital Reasoning Machines

Huang highlighted the emergence of agentic intelligence—AI systems that not only generate responses but reason through tasks, adapt using memory, and execute multi-step plans using tools. These agents:

- Perceive multimodal input (text, images, voice, video)

- Plan and coordinate actions autonomously

- Reflect and self-improve with memory and context

- Operate across public, private, and hybrid cloud environments

This transition from generative AI to agentic AI represents a new class of workload—one that demands continuous inference, dynamic scaling, and near-zero latency.
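The perceive, plan, act, and reflect cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not NVIDIA’s or NEXTDC’s implementation: the `Agent` class, the `TOOLS` registry, and the fixed two-step plan are all hypothetical stand-ins for what a reasoning model and real tool integrations would provide.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical tool registry: each tool maps a text input to a text output.
Tool = Callable[[str], str]

TOOLS: Dict[str, Tool] = {
    "search": lambda query: f"results for '{query}'",
    "summarise": lambda text: text[:40],
}


@dataclass
class Agent:
    """Toy agent: perceives input, plans steps, calls tools, records memory."""

    tools: Dict[str, Tool]
    memory: List[str] = field(default_factory=list)

    def perceive(self, observation: str) -> str:
        # Normalise text input; a real agent would also handle images, voice, video.
        return observation.strip().lower()

    def plan(self, goal: str) -> List[Tuple[str, str]]:
        # Fixed two-step plan standing in for a reasoning model's output.
        # An empty argument means "use the previous step's result".
        return [("search", goal), ("summarise", "")]

    def act(self, steps: List[Tuple[str, str]]) -> str:
        result = ""
        for tool_name, arg in steps:
            result = self.tools[tool_name](arg or result)
            # Reflection is reduced here to logging each step into memory.
            self.memory.append(f"{tool_name} -> {result}")
        return result

    def run(self, observation: str) -> str:
        goal = self.perceive(observation)
        return self.act(self.plan(goal))


agent = Agent(tools=TOOLS)
answer = agent.run("  GPU rack cooling options ")
print(answer)
print(agent.memory)
```

Even in this toy form, every request triggers several tool calls and memory writes, which is why agentic workloads lean on continuous inference rather than one-shot generation.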

NEXTDC’s infrastructure is ready for this class of compute. Our facilities are designed to support the distributed architectures that agentic AI demands—spanning cloud adjacency, sovereign AI deployment, and seamless integration across toolchains and models.

The New Economics of Intelligence: Tokens as Throughput

“The more tokens you can generate—and the faster you can reason—the more revenue your factory produces.”

— Jensen Huang

One of the most impactful themes from Huang’s keynote was the economics of token generation. In the AI factory era, tokens—units of intelligent output—have become the product. And like any production environment, throughput equals value.

The faster your infrastructure can generate high-quality tokens (e.g., words, images, actions, plans), the greater your ability to scale services, insights, and automation. This shifts AI infrastructure from a technical asset to a strategic revenue platform.
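To make the throughput-equals-value argument concrete, here is a rough back-of-the-envelope sketch. Every figure in it (tokens per second, utilisation, and price per million tokens) is a hypothetical placeholder chosen for illustration, not a number from the keynote or from NEXTDC.

```python
SECONDS_PER_DAY = 86_400


def tokens_per_day(tokens_per_second: float, utilisation: float) -> float:
    """Tokens produced in a day at a given average utilisation (0.0 to 1.0)."""
    return tokens_per_second * utilisation * SECONDS_PER_DAY


def daily_revenue(
    tokens_per_second: float,
    utilisation: float,
    price_per_million_tokens: float,
) -> float:
    """Revenue per day if every generated token is sold at a flat price."""
    millions = tokens_per_day(tokens_per_second, utilisation) / 1_000_000
    return millions * price_per_million_tokens


# Hypothetical inputs: same utilisation and price, double the throughput.
baseline = daily_revenue(50_000, utilisation=0.6, price_per_million_tokens=2.0)
faster = daily_revenue(100_000, utilisation=0.6, price_per_million_tokens=2.0)

print(f"baseline: {baseline:,.0f} per day")
print(f"faster:   {faster:,.0f} per day")
print(f"ratio:    {faster / baseline:.2f}x")
```

Under this simple flat-price model, revenue scales linearly with throughput. Real pricing, demand, and quality constraints would complicate the picture.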

At NEXTDC, we’re optimising every layer of the AI production pipeline:

- Dense, low-latency networking

- Power-efficient liquid cooling

- Secure and compliant data handling

- Interconnect-ready designs for ultra-scale GPU clusters

AI is no longer something you build on infrastructure. It is the infrastructure.

Grace Blackwell and the Infrastructure to Match

The hardware centrepiece at VivaTech was Grace Blackwell (GB200 NVL72), a roughly 2-ton, liquid-cooled rack-scale system that operates as one unified virtual GPU. Designed to support next-gen reasoning models, GB200 delivers:

- 130 TB/s of NVLink bandwidth

- Integrated CPU-GPU compute trays

- Ultra-dense rack design for parallel inference and real-time decisioning

- An NVLink “spine” whose bandwidth, by Huang’s account, exceeds peak global internet traffic
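The 130 TB/s figure can be sanity-checked with simple arithmetic. The sketch below assumes NVIDIA’s published rack configuration of 72 Blackwell GPUs at 1.8 TB/s of fifth-generation NVLink bandwidth each. Those two inputs come from NVIDIA’s product specifications rather than from this article.

```python
# Assumed inputs from NVIDIA's published GB200 NVL72 specifications.
GPUS_PER_RACK = 72               # Blackwell GPUs in one NVLink domain
NVLINK_TB_PER_S_PER_GPU = 1.8    # fifth-generation NVLink bandwidth per GPU

aggregate_tb_per_s = GPUS_PER_RACK * NVLINK_TB_PER_S_PER_GPU

# The aggregate rounds to the headline 130 TB/s figure.
print(f"Aggregate NVLink bandwidth: {aggregate_tb_per_s:.1f} TB/s")
```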

To support this calibre of compute, infrastructure must meet new thresholds for energy, interconnectivity, and physical design. NEXTDC’s blueprint already anticipates these needs—enabling AI-first enterprises, hyperscalers, and governments to deploy at pace.

Global Infrastructure Race: Europe Wakes Up, APAC Leads

NVIDIA confirmed that Europe will grow its AI compute capacity tenfold by 2027, with over 20 AI factories now in development. This surge is a strategic response to digital sovereignty, AI competitiveness, and data residency demands.

In APAC, NEXTDC is already ahead of the curve. We are delivering scalable, sovereign-ready infrastructure that supports national AI agendas, industry R&D, and next-gen cloud-native workloads.

Just as Europe rallies to protect its AI future, Australia is building its own foundation for long-term leadership—and we’re proud to be driving that transformation.

NeMo, Nemotron, and the Agent Stack

NVIDIA unveiled its NeMo platform—an open framework for building enterprise-grade AI agents using:

- Open-source LLMs (e.g., Mistral, Llama, Nemotron)

- Context extension and reasoning optimisation

- Post-training, fine-tuning, and deployment flexibility

- Toolchain integration for reinforcement learning and AI ops

These aren’t just models—they’re digital employees, and they need highly available, secure, sovereign-compliant environments in which to think and operate.

NEXTDC provides the AI factory floor where agents go to work—powering services, workflows, and customer experiences at scale.

Digital Twins, Robotics, and the Next Frontier

What began with photorealistic graphics is now reshaping industrial design. Huang’s keynote showcased Omniverse-powered digital twins as the simulation layer for AI:

- Photoreal, physics-based environments

- Real-time motion planning for humanoid robots

- End-to-end design for fusion reactors, autonomous factories, and robotic logistics

AI agents are now being trained in virtual twins before being deployed in the physical world. At NEXTDC, our facilities act as the operational mirror of these simulated environments—delivering the power, connectivity, and control required to bridge virtual and real.

Looking Ahead: Quantum-Classical Convergence

Another announcement that hints at what’s next: CUDA-Q and the advent of hybrid quantum-classical computing. With QPUs now designed to interoperate with GPUs on workloads such as molecular modelling and physics simulation, the AI infrastructure stack is poised to evolve yet again.
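The division of labour in a hybrid quantum-classical workload can be illustrated with a toy variational loop: a classical optimiser repeatedly asks a quantum routine for an expectation value and adjusts a parameter. The sketch below does not use CUDA-Q. The “quantum” step is a closed-form stand-in for a single qubit computed on the CPU, and all function names are hypothetical.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Stand-in for a QPU call.

    For the single-qubit state Ry(theta)|0>, the expectation value of
    Pauli-Z is cos(theta). A real system would estimate this from shots.
    """
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two extra circuit evaluations (parameter-shift rule)."""
    shift = math.pi / 2
    return 0.5 * (quantum_expectation(theta + shift)
                  - quantum_expectation(theta - shift))


def minimise(theta: float = 0.3, learning_rate: float = 0.4, steps: int = 100):
    """Classical gradient descent driving the (simulated) quantum routine."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


theta_opt, energy = minimise()
print(f"theta = {theta_opt:.4f}, <Z> = {energy:.6f}")
```

In a real deployment the expectation-value call would run on a QPU or a GPU-accelerated simulator while the optimiser stays on classical hardware, which is the interoperation the keynote pointed to.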

NEXTDC’s modular, future-ready approach ensures we can integrate emerging compute models—supporting innovation that hasn’t yet hit the mainstream.

Meet Grek: The Arrival of Humanoid AI

In one of the most captivating moments of the keynote, Huang introduced Grek, a humanoid robot trained entirely in NVIDIA’s digital twin environment. Grek walked, jumped, responded to language, and even posed for selfies.

It was a compelling demonstration of where AI is headed: embodied, intuitive, and deeply integrated into our daily lives. Supporting this future requires infrastructure that’s as adaptive as the intelligence it powers.

Ready to Build Your AI Factory?

NVIDIA’s keynote delivered a bold vision. At NEXTDC, we’re making it real.

Whether you’re scaling LLM inference, enabling agentic AI, running multimodal workflows, or deploying sovereign cloud infrastructure, we’re ready to help you build an AI factory that works harder, faster, and smarter.

Talk to an AI Infrastructure Specialist

Let’s build the future of intelligence together.