What AI Means for Infrastructure: Key Insights from Jensen Huang’s COMPUTEX 2025 Keynote in Taiwan

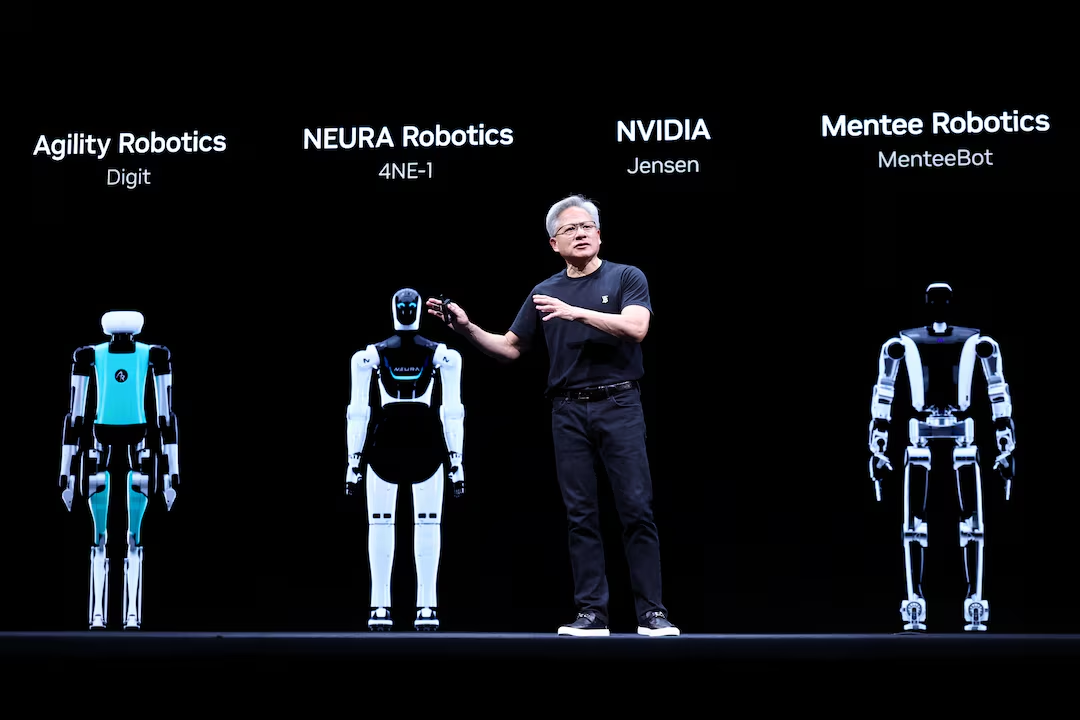

When NVIDIA CEO Jensen Huang steps on stage, he rarely just announces new hardware. At COMPUTEX 2025, he outlined a sweeping transformation in how the world builds and operates IT, from chips and supercomputers to robots and factories.

Here’s what was announced, what problems it solves, and what it means for technology leaders today.

From Data Centres to AI Factories

What was announced:

Huang reframed the modern data centre as an “AI factory”: a production system that turns energy into intelligence. Rather than simply processing workloads, these AI-native facilities generate output in the form of tokens, the basic units of text and data that large language models read and produce.

Problem it solves:

Traditional data centres were never built for generative AI at scale. They struggle with high-throughput inference and lack the agility needed for multimodal AI applications.

Why it matters to CIOs and CTOs:

These AI factories are designed for real-time reasoning and scalable output, the new yardsticks of digital competitiveness. If you’re modernising your infrastructure, they set the standard for the next decade.

Grace Blackwell: Performance for Real-Time Reasoning

What was announced:

The Grace Blackwell GB300 platform — NVIDIA’s most powerful AI system to date — features a liquid-cooled rack with 40 petaflops of performance, 1.5x more inference power, and 2x networking bandwidth compared to its predecessor. One node now rivals the 2018-era Sierra supercomputer.

Problem it solves:

Today’s AI requires fast, iterative reasoning — not just static training. Most infrastructure can't keep up with inference demands, especially for large, multimodal models.

Why it matters to CIOs and CTOs:

If you’re building applications with conversational AI, retrieval-augmented generation, or digital agents, your inference stack matters more than training. Grace Blackwell is optimised for fast thinking at scale — a major leap for any business deploying production AI.

NVLink Fusion: Scalable, Modular AI Infrastructure

What was announced:

NVLink Fusion allows organisations to build semi-custom infrastructure by mixing NVIDIA GPUs with custom CPUs, TPUs or ASICs. It standardises the high-speed interconnect between components while leaving the choice of processors open.

Problem it solves:

Most high-performance infrastructure is tightly coupled to a vendor stack. Integrating custom accelerators or third-party processors at scale has been difficult and expensive.

Why it matters to CIOs and CTOs:

You’re no longer boxed into a one-size-fits-all architecture. NVLink Fusion makes it possible to build AI infrastructure around your workloads, not the other way around, while still accessing NVIDIA’s ecosystem of performance, software and tooling.

RTX Pro Server & DGX Spark: AI for the Enterprise Edge

What was announced:

- RTX Pro Server supports hybrid enterprise workloads, combining x86 virtualisation and AI-native apps.

- DGX Spark is a desk-side AI workstation with 1 petaflop of AI compute and 128 GB of unified memory, matching the performance of NVIDIA’s first DGX supercomputer from 2016.

Problem it solves:

Not every workload belongs in the cloud. Developers need fast, local experimentation. And enterprises need systems that can bridge classical IT and AI workloads.

Why it matters to CIOs and CTOs:

Whether you're deploying AI agents, building private LLMs, or modernising virtual desktops — these systems let you own the hardware and control the stack, without sacrificing power or flexibility.

Agentic AI: From Copilots to Digital Workers

What was announced:

Huang highlighted a new class of AI, agentic AI: digital agents that can perceive, reason, and act. These agents break down goals, use tools, collaborate with other agents, and make decisions independently.

Problem it solves:

Enterprises face labour shortages, rising overheads, and productivity plateaus, particularly in repetitive and decision-based tasks.

Why it matters to CIOs and CTOs:

Agentic AI offers digital teammates that can scale alongside your workforce. From customer service and operations to software development and marketing, they enable a new model of hybrid human–AI collaboration.

Isaac Platform for Robotics: Simulation-First Automation

What was announced:

NVIDIA’s Isaac platform was updated with Isaac GR00T-Dreams, a synthetic data generator for training robots in simulation. The platform supports humanoids, industrial robots, and autonomous vehicles, with pre-trained models, digital twins, and a real-time physics engine (Newton).

Problem it solves:

Training robots in the real world is expensive and slow. Data collection, safety testing, and skill transfer are major hurdles to scale.

Why it matters to CIOs and CTOs:

For industries exploring robotics-as-a-service or automation, Isaac shortens deployment timelines and improves training safety. You can now teach robots in digital environments — before sending them to your warehouse, plant, or city.

Digital Twins with Omniverse: De-Risking Physical Buildouts

What was announced:

Omniverse is being used by leading manufacturers (TSMC, Foxconn, Delta) to simulate full-scale digital twins, including factory layouts, robotic workflows, and energy systems. It integrates physics-based simulation, CAD imports, and AI-trained agents in real time.

Problem it solves:

Planning factories, data centres, and new sites without simulation leads to high CapEx, delays, and inefficiency. Physical rework wastes both time and millions of dollars.

Why it matters to CIOs and CTOs:

If you're overseeing transformation, expansion or new buildouts, digital twins can simulate the impact of every decision — from layout to cooling to robotic coordination. It’s a risk mitigation tool that directly links infrastructure to business outcomes.

Real-World Example: What This Looks Like in Practice

Let’s say you’re a global manufacturer needing to:

- Reduce downtime and energy waste

- Address skilled labour shortages

- Automate warehouse or factory tasks

- Shorten product development cycles

With NVIDIA’s new stack:

- Use Omniverse to simulate and optimise operations before physical rollout.

- Let your teams experiment with DGX Spark — no cloud delays, no GPU queues.

- Deploy agentic AI to support logistics, design, or customer engagement.

- Train industrial robots in Isaac’s physics-based simulators — and scale faster.

This is already being deployed by NVIDIA partners — not years away, but today.

Why This Matters for CIOs and CTOs

“We're not just creating the next generation of IT,” Huang said. “We’re building a new industry.”

| Challenge You Face | What NVIDIA Just Delivered |
|---|---|
| Legacy data centres can’t scale AI | AI factories built for token-based, high-throughput reasoning |
| Rigid, inflexible vendor stacks | NVLink Fusion supports modular, composable infrastructure |
| High cost of experimentation | DGX Spark and RTX Pro bring compute to the developer’s desk |
| Workforce productivity plateau | Agentic AI introduces scalable digital teammates |
| Automation bottlenecks in robotics | Isaac + simulation-based training accelerates deployment |
| High-risk infrastructure investments | Omniverse-powered digital twins reduce design and build risk |

On the Ground in Taiwan: NEXTDC CEO Craig Scroggie’s Key Observations

Insights from the frontline of global innovation — as shared by NEXTDC CEO Craig Scroggie

From our time on the ground in Taiwan last week, one thing is clear: AI is outpacing traditional infrastructure cycles. The global technology ecosystem is no longer operating on theoretical roadmaps; it’s deploying, scaling, and redefining value in real time. As Craig Scroggie put it:

“If you can’t build at the speed of demand, you’re out of the race before it starts.”

We’re not planning in megawatts anymore. We’re entering a new era of gigawatt-scale strategy, where infrastructure readiness is the gating factor for progress. These aren’t future-state concepts; they’re live projects demanding sovereign, high-density infrastructure, deployed at unprecedented speed.

Australia’s role in this transformation is becoming increasingly strategic. It’s energy-secure, geopolitically aligned, and tightly connected to Asia, positioning it as one of the world’s most valuable landing zones for AI infrastructure investment. Global technology leaders are already responding.

“Export restrictions don’t suppress capability, they accelerate independence. The more you limit access, the faster alternative ecosystems rise.”

This is not a theory. It’s a shift in execution. Infrastructure players are moving toward new economic models, where AI offtake commitments become the modern equivalent of power purchase agreements. When customers commit, capital flows and scale becomes real.

“AI factories are the new engines of the global economy, turning raw data into tokens, and tokens into intelligence.”

“In this era, the unit of production isn’t code or compute, it’s tokens. This is how knowledge is now manufactured.”

This keynote wasn’t about performance metrics or launch dates; it reframed the entire enterprise computing stack. From silicon to simulation, it made one thing clear: AI is no longer a feature. It’s the foundation.

For CIOs and CTOs, the Path Forward Is Clear:


- Modernise infrastructure around scalable inference and token generation

- Equip teams to build and deploy AI at the edge, not just in the cloud

- Rethink enterprise IT architectures for a world of digital workers and autonomous systems

- De-risk transformation through simulation-first strategies and digital twins

The next wave is infrastructure that thinks. Those who build it early will define what comes next.

The AI race is real — are you building fast enough?

Discover how NEXTDC is enabling gigawatt-scale, high-density, sovereign infrastructure, certified as an NVIDIA DGX-Ready Data Center, built for token generation and scalable AI inference.

Explore our AI-Ready Data Centres or speak with our Sales Team today.
