The AI Factory Era: Reshaping Digital Infrastructure for Hyperscalers

Artificial intelligence is not just a technological advancement; it is driving a fundamental shift in how we conceive and construct digital infrastructure. The familiar landscape of traditional data centres is rapidly giving way to "AI Factories": vast, software-defined computing complexes engineered to manufacture intelligence as their primary product, much as their industrial counterparts produce goods.

NVIDIA CEO Jensen Huang describes this shift directly: "AI is now infrastructure, and this infrastructure, just like the internet, just like electricity, needs factories... They're not data centers of the past... They are, in fact, AI Factories. You apply energy to it, and it produces something incredibly valuable... called tokens."1 In essence, these next-generation facilities are engines that consume power and data to generate machine learning models, real-time predictions, and autonomous digital agents at a scale previously unimaginable. As hyperscalers aggressively retool for this AI-driven era, digital infrastructure is no longer merely supportive; it is emerging as the scaffolding of the Fourth Industrial Revolution, where compute dictates capital investment and data solidifies its position as the world's most valuable resource.

Navigate through the critical dimensions shaping the AI Factory era and the future of digital infrastructure:

- Hyperscale Demands: 600kW Racks and Trillion-Token Workloads

- Redefining Priorities: Speed, Scale, Sovereignty, Sustainability, Security (The “5S” Model)

- NEXTDC: Your Strategic Partner for Next-Generation AI Infrastructure

From Data Centres to AI Factories: NVIDIA’s Vision

NVIDIA has been at the forefront of articulating the AI Factory concept. According to NVIDIA’s own definition, “an AI Factory is a specialised computing infrastructure designed to create value from data by managing the entire AI life cycle, from data ingestion to training, fine-tuning, and high-volume inference.”2

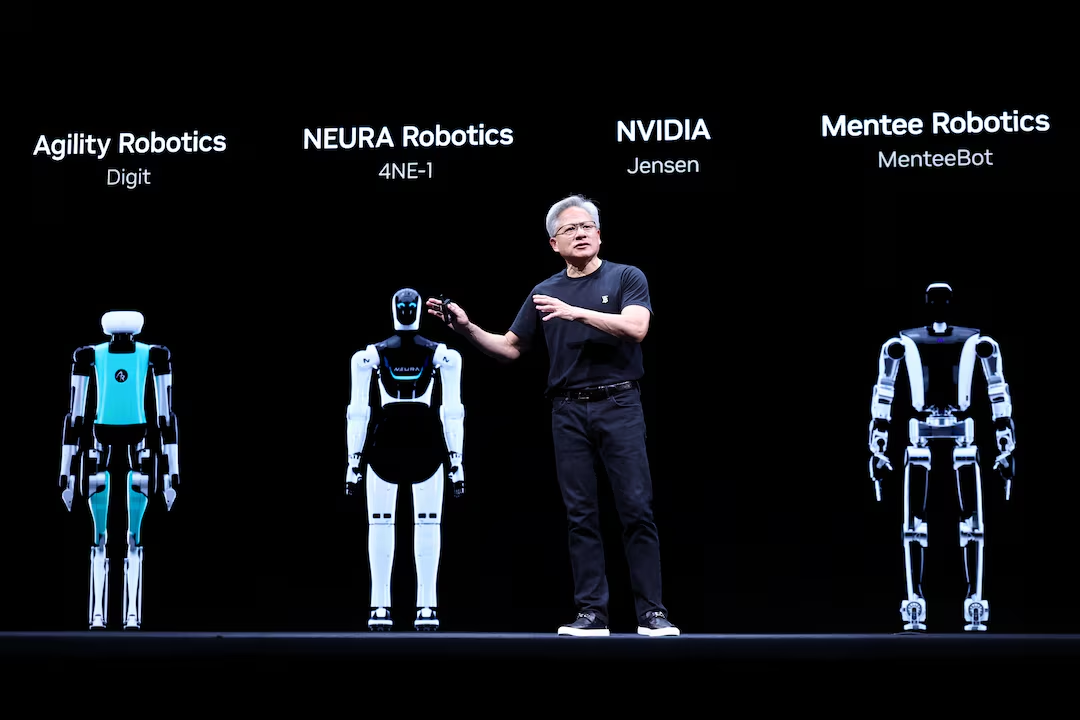

In this model, the “product” is intelligence itself, measured in terms such as token throughput (the rate at which AI models process data). The idea is that every major enterprise will eventually run an AI Factory alongside its traditional operations1. Huang even predicts that in the future “every company will have a secondary ‘AI Factory’ in parallel to their manufacturing plants”3, a bold claim underlining how integral AI capabilities will become across industries.

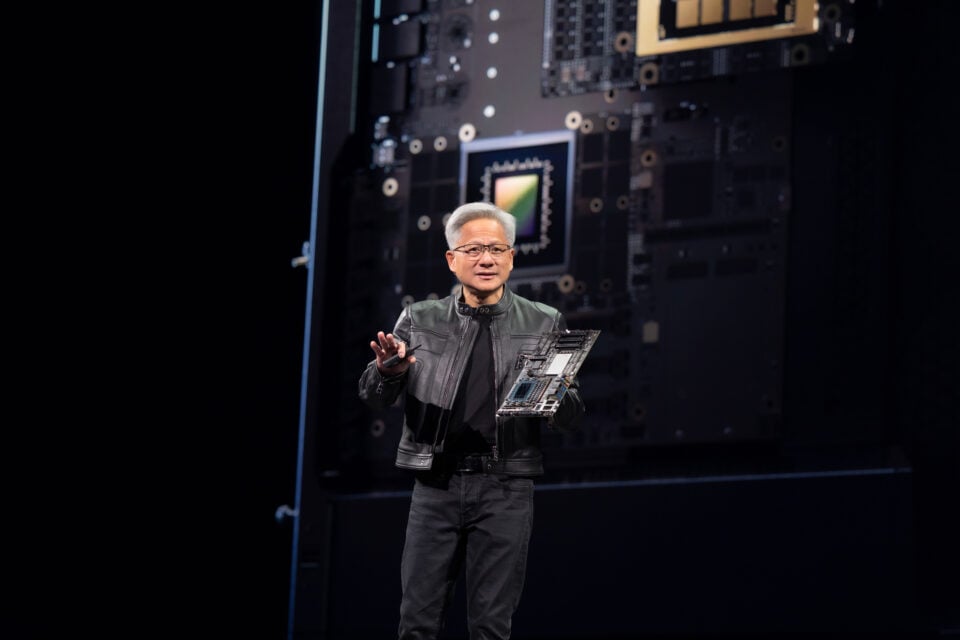

This vision reframes the data centre from a passive warehouse of computers to an active, integrated AI production line. “The modern computer is an entire data center,” Huang noted, emphasising that NVIDIA now “treat[s] the whole data centre as one computing unit.”4 In practice, that means tightly coupling thousands of GPUs with high-speed networking, storage and software into cohesive systems purpose-built for AI. “We’re not building servers; we’re building AI factories,” Huang said of NVIDIA’s strategy4.

This shift towards viewing the data centre itself as the computer is revolutionary. It blurs the lines between hardware and software, between on-premises and cloud, and between a product and the infrastructure that produces it. Just as the electrification of industry in the 20th century required new power stations and grids, the rise of AI as a ubiquitous service is giving birth to a new class of digital factories.

Hyperscale Demands: High Density Racks and Trillion-Token Workloads

Meeting the needs of advanced AI at hyperscale requires orders-of-magnitude leaps in infrastructure capability. The numbers are staggering. Today’s state-of-the-art AI supercomputers already consume tens of megawatts; tomorrow’s could draw hundreds of megawatts or more6. NVIDIA’s latest reference designs, revealed in 2025, point toward individual rack enclosures powering 500+ GPUs and drawing 600 kilowatts each, roughly 5× the power of the highest-density racks in use today. For context, a single 600kW AI rack can consume more electricity in a couple of hours than an average family home uses in a month. Huang even hinted that megawatt-class racks are on the horizon, suggesting 600kW is merely a stepping stone6.
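The household comparison above can be checked with simple arithmetic. The sketch below assumes a rack running at a constant 600 kW and a household that uses 900 kWh per month; that household figure is an illustrative assumption rather than a number from the cited sources, and real averages vary widely by country.

```python
# Back-of-envelope check of the rack-versus-household comparison.
RACK_POWER_KW = 600.0        # per-rack draw quoted in the text
HOME_KWH_PER_MONTH = 900.0   # ASSUMPTION: illustrative household usage


def rack_energy_kwh(power_kw: float, hours: float) -> float:
    """Energy (kWh) used by a rack drawing constant power for `hours`."""
    return power_kw * hours


def hours_to_match_home(power_kw: float, home_kwh_per_month: float) -> float:
    """Hours a rack needs to consume one month of household energy."""
    return home_kwh_per_month / power_kw


if __name__ == "__main__":
    # Energy drawn by one rack in two hours, in kWh.
    print(rack_energy_kwh(RACK_POWER_KW, 2.0))
    # Hours of rack operation equal to one household-month.
    print(hours_to_match_home(RACK_POWER_KW, HOME_KWH_PER_MONTH))
```

Under these assumptions, two hours of operation comes to 1,200 kWh, which is more than the assumed 900 kWh household month.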

Advanced liquid cooling infrastructure is becoming essential. As modern AI chips push past 700W each, rack power densities are soaring from 15kW (typical in older enterprise data centres) to well over 100kW today and up to 600kW per rack in the near future. Traditional air cooling can’t handle this heat, so AI Factories employ liquid cooling, rear-door heat exchangers, and other innovations to keep these “digital furnaces” running efficiently.

This ultra-high-density trend is exemplified by NVIDIA’s new “Kyber” NVL576 rack design, previewed at GTC 2025, which packs 576 GPUs (in four pods) and delivers up to ~15 exaflops of AI performance in a single rack5. Such a rack draws about 600kW of power, meaning data centre operators must deliver five times the power per rack compared to the previous generation (the current Blackwell-based racks draw ~120kW each)5. To put it bluntly, power delivery and cooling have become the limiting factors. “Every single data center in the future is going to be power-limited,” notes Wade Vinson, NVIDIA's Chief Data Center Engineer, explaining that a facility’s AI throughput, and therefore its revenue potential, will be capped by how much power (and cooling) it can supply6. NVIDIA even measures “grid-to-token” conversion efficiency, meaning how efficiently an AI Factory turns watts into trained models and inferences. Any watt not spent on computation is essentially lost productivity (and lost opportunity) for AI at scale6.
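To make the "power-limited" idea concrete, here is a minimal sketch of how a fixed grid connection caps rack count and token output. It is not NVIDIA's grid-to-token metric, which the cited sources do not define in detail. The `Facility` class, the `it_fraction` parameter (the share of grid power that actually reaches compute), and the `tokens_per_joule` figure are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Facility:
    grid_power_kw: float   # total power drawn from the grid
    it_fraction: float     # ASSUMPTION: share of grid power reaching compute
    rack_power_kw: float   # per-rack draw, e.g. 120 or 600 as in the text

    def it_power_kw(self) -> float:
        """Power left for compute after cooling and distribution overhead."""
        return self.grid_power_kw * self.it_fraction

    def max_racks(self) -> int:
        """Whole racks that fit inside the compute power budget."""
        return int(self.it_power_kw() // self.rack_power_kw)


def tokens_per_second(facility: Facility, tokens_per_joule: float) -> float:
    """Token output if every installed rack runs at full power.

    tokens_per_joule is a HYPOTHETICAL efficiency figure, not a measured one.
    """
    compute_watts = facility.max_racks() * facility.rack_power_kw * 1000.0
    return compute_watts * tokens_per_joule


if __name__ == "__main__":
    baseline = Facility(grid_power_kw=96_000.0, it_fraction=0.75, rack_power_kw=600.0)
    improved = Facility(grid_power_kw=96_000.0, it_fraction=0.875, rack_power_kw=600.0)
    for name, site in (("baseline", baseline), ("improved", improved)):
        print(name, site.max_racks(), tokens_per_second(site, tokens_per_joule=0.5))
```

With the grid connection held constant, raising the share of power that reaches compute is the only way this toy model adds racks, which is the sense in which a watt not spent on computation is lost output.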

These realities are driving a renaissance in data centre engineering. Liquid cooling has moved from niche HPC deployments to a mainstream requirement for AI infrastructure. Direct chip cooling, two-phase immersion baths, and rear-door heat exchangers are deployed to dissipate heat that air cooling simply cannot handle. For example, cooling vendors like Accelsius and CoolIT have demonstrated liquid systems supporting 4,500W per GPU and racks above 250kW, while keeping temperatures in check even with warm (40°C) coolant, a feat impossible with legacy air cooling7. In fact, analysts project 14% annual growth in data centre power and cooling investments through 2029, reaching $61 billion globally7, as operators race to accommodate these dense AI workloads.
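The growth projection above implies a starting market size that the text does not state. As a rough sketch, the compound-growth arithmetic can be run backwards from the $61 billion figure. The five-year horizon used here (2024 to 2029) is an assumption, since the base year is not given in this article.

```python
def project(base: float, annual_rate: float, years: int) -> float:
    """Value after compounding `base` at `annual_rate` for `years` years."""
    return base * (1.0 + annual_rate) ** years


def implied_base(final: float, annual_rate: float, years: int) -> float:
    """Starting value that compounds to `final` at `annual_rate`."""
    return final / (1.0 + annual_rate) ** years


if __name__ == "__main__":
    YEARS = 5  # ASSUMPTION: 2024 base year; the article does not state one
    base = implied_base(final=61.0, annual_rate=0.14, years=YEARS)
    print(f"Implied starting market: ${base:.1f} billion")
    for year in range(YEARS + 1):
        print(2024 + year, round(project(base, 0.14, year), 1))
```

Changing `YEARS` shows how sensitive the implied starting figure is to the assumed base year.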

Racks that used to draw 5–15kW (sufficient for web and enterprise apps) now routinely require 100–200kW for AI clusters, a paradigm shift in facility design. This isn’t simply adding more servers; it means redesigning electrical and cooling topologies, increasing floor loading capacities (since a rack can weigh thousands of kilograms when filled with GPUs and cooling gear), and even exploring new energy sources. Hyperscalers are investigating on-site power generation and alternatives like small modular nuclear reactors to feed their AI factories in the 2030s5.

Crucially, scale itself has become a competitive advantage. Jensen Huang quipped that “these are gigantic factory investments… the more you buy, the more you make,” referring to the virtuous cycle of AI compute: more GPUs yield better models, which attract more users and revenue, which in turn fund even larger clusters1. It’s no surprise, then, that we’re seeing hyperscalers and nations announce massive AI infrastructure projects. At Computex 2025, NVIDIA and Foxconn, with support from the Taiwanese government, unveiled plans for a new AI factory supercomputer in Taiwan with 10,000 GPUs (based on next-gen Blackwell architecture)4. This 100 MW+ facility will deliver AI capacity on the order of exaflops, positioning Taiwan as a global leader in AI R&D and providing a sovereign capability for its tech industry4.

Similar hyperscale AI data centres are being built or planned worldwide, from North America and Europe to Asia, often in close partnership with cloud providers and local authorities. Each one is effectively an AI factory pumping out advanced models and services, and their success will depend on how well their infrastructure can feed AI's never-ending appetite for more compute.

Redefining Priorities: Speed, Scale, Sovereignty, Sustainability, Security (The “5S” Model)

The emergence of AI factories is causing IT and data centre leaders to rethink their priorities. In the past, one might plan a data centre around total capacity (square footage, total megawatts, etc.) and cost efficiency. Today, five strategic priorities, call them the 5S, have come to the forefront for hyperscalers building and operating AI infrastructure:

Speed: In the context of AI factories, speed has multiple meanings. First, time-to-value is critical: how quickly can you train a new model or deploy additional capacity when demand spikes? Hyperscalers now compete on how fast they can stand up new GPU clusters or roll out AI services. Cloud-native AI platforms emphasise rapid provisioning and minimal friction: for example, offering on-demand GPU capacity by the hour, with ready-to-use AI frameworks, so that development teams can move at the pace of innovation. Executives need to ensure their infrastructure (and partners) can deploy at hyperspeed, both in terms of provisioning hardware and enabling fast data throughput. Second, high-performance connectivity (low-latency networks, edge locations near users) comes into play, since model training and inference happen in real time. In short, if your AI factory can’t keep up with the speed of experimentation and user demand, innovation will occur elsewhere.

Scale: AI workloads that once ran on a few servers now run on thousands of GPUs in parallel. Scale isn’t just about big data centres; it’s about seamless scaling within and across facilities. Hyperscale AI factories must support petaflops to exaflops of compute, millions of simultaneous model queries, and training runs involving trillions of parameters. This requires architectural designs that are modular and replicable. For instance, NVIDIA's reference AI Factories are built from “pods” or blocks of GPUs that can be cloned and interconnected by the hundreds5. Cloud providers talk about availability zones dedicated to AI, and “AI regions” popping up where there is abundant power. The goal is to scale out AI compute almost as a utility, adding more AI Factory floor with minimal disruption. Scale also implies having a global footprint: hyperscalers such as AWS, Google and Alibaba are extending AI infrastructure to more regions to serve local needs while balancing workloads worldwide. If an AI service suddenly needs 10× more capacity due to a viral app or a breakthrough model, the infrastructure should scale without months of delay. As Huang revealed, NVIDIA has even given partners visibility of its five-year roadmap, because building out AI-ready power and space requires long lead times4. Leading data centre operators are now planning for 100+ MW expansions preemptively to ensure scale is never a bottleneck to innovation.

Sovereignty: Data sovereignty and infrastructure sovereignty have become critical in the age of AI. As AI systems are deployed into sensitive domains, from healthcare diagnostics to national security, where and under whose laws the data and models reside is a top concern. Hyperscalers must navigate a patchwork of regulations that increasingly demand certain data stay within national borders, or that AI workloads be processed in locally controlled facilities for privacy and strategic reasons. The recent push for “sovereign cloud” offerings in Europe and elsewhere reflects this trend. For AI Factories, sovereignty can mean choosing data centre locations to meet regulatory requirements and customer trust expectations. It’s no longer just about tech specs, but also about geopolitical and compliance positioning. For example, European cloud users might prefer (or be required by law) to use AI infrastructure hosted in the EU by EU-based providers. In China, AI infrastructure must be locally hosted due to strict data laws. Even within countries, certain government or enterprise workloads demand sovereign-certified facilities, meaning those vetted for handling classified data or critical infrastructure roles. As NEXTDC notes, “Where your AI infrastructure lives isn’t just a technical choice, it’s a competitive one. Latency, compliance, and sustainability are all shaped by location… Neoclouds choose sites based on a strategic mix of low latency, data sovereignty and energy resilience.” In practice, this means hyperscalers are investing in regions they previously left to partners and partnering with local data centre specialists to ensure sovereign coverage. The AI factory revolution will not be one-size-fits-all globally; it will be a network of regionally attuned hubs that balance global scale with local control.

Sustainability: The power-hungry nature of AI has put sustainability at the centre of the conversation. Boards and governments are increasingly scrutinising the energy and carbon footprint of AI operations. A single large AI training run can consume as much electricity as hundreds of homes; scaled across many runs, AI could significantly impact corporate and national energy goals. Hyperscalers are acutely aware that any perception of AI as “wasteful” or environmentally harmful could draw regulatory or public backlash, not to mention the direct impact on energy costs. Thus, the new mantra is “performance per watt” and designing for efficiency from the ground up. Leading cloud data centres are committing to 100% renewable energy (through solar, wind, hydro or even emerging nuclear partnerships5) to power AI factories. They’re also adopting advanced cooling to reduce waste: liquid cooling, for example, can slash cooling power overhead and even enable heat reuse, improving PUE (Power Usage Effectiveness) dramatically. Every aspect of facility design is under the microscope for sustainability, from using sustainable building materials to implementing circular economy principles for hardware (recycling and reusing components). Importantly, hyperscalers are now reporting metrics like “carbon per AI inference” or “energy per training run” as key performance indicators. The next generation of data centres will be judged not just on capacity, but on efficiency. As one NEXTDC report put it, “the next generation of Neoclouds won't just be measured by performance alone; they'll be judged by efficiency… boards, regulators and customers are asking: Where is the energy coming from? How efficient is your data centre? What is the carbon impact per GPU-hour?” To remain competitive (and compliant), AI factories must be sustainable by design, aligning with global net-zero ambitions and corporate ESG commitments. Sustainability is no longer a nice-to-have Corporate Social Responsibility (CSR) item; it’s a core design principle and differentiator in the AI era.
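The efficiency questions quoted above can be expressed as two small calculations. PUE is defined as total facility energy divided by IT equipment energy. The carbon-per-GPU-hour formula below is a simplified sketch that multiplies GPU power by PUE and by grid carbon intensity. The PUE values and the grid intensity used in the example are hypothetical, and the 0.7 kW GPU draw is taken from the 700W chip figure mentioned earlier in this article.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy over IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def carbon_per_gpu_hour(gpu_power_kw: float, pue_value: float,
                        grid_kg_co2e_per_kwh: float) -> float:
    """Simplified kg CO2e for one GPU running for one hour.

    Scales GPU energy by PUE to include cooling and distribution overhead,
    then applies the grid's carbon intensity. Ignores embodied carbon.
    """
    return gpu_power_kw * pue_value * grid_kg_co2e_per_kwh


if __name__ == "__main__":
    GPU_KW = 0.7           # 700W per chip, as mentioned in the article
    GRID_INTENSITY = 0.5   # ASSUMPTION: hypothetical kg CO2e per kWh
    for label, site_pue in (("air-cooled", 1.5), ("liquid-cooled", 1.15)):
        # PUE values here are hypothetical, for comparison only.
        kg = carbon_per_gpu_hour(GPU_KW, site_pue, GRID_INTENSITY)
        print(f"{label}: {kg:.3f} kg CO2e per GPU-hour")
```

In this model a lower PUE or a cleaner grid reduces carbon per GPU-hour in direct proportion, which is why both facility efficiency and energy sourcing matter.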

Security: With AI becoming a backbone for everything from financial services to autonomous vehicles, the security of AI infrastructure is paramount. Here we mean both cybersecurity and physical security/resilience. On the cyber side, AI workloads often involve valuable training data (which could include personal data or proprietary information) and models that constitute intellectual property worth billions. Protecting these from breaches is critical: a compromised AI model or a disrupted AI service can cause immense damage. Hyperscale AI factories are targets for attackers ranging from lone hackers to state-sponsored groups, all seeking to steal AI tech or sabotage services. This means investing in robust encryption (for data at rest and in transit), secure access controls, continuous monitoring powered by AI itself, and isolated compute environments (to prevent one client’s AI environment from affecting another’s in multi-tenant clouds). On the physical side, downtime is unacceptable: an AI Factory outage could halt operations for a business or even knock out critical infrastructure (imagine if an AI-driven power grid or hospital network fails). Thus, AI data centres are built with extreme redundancy and hardened against threats. Many pursue Tier IV certification for fault tolerance and add features such as on-site backup power for days, multi-factor access controls, and even EMP or natural disaster protection in some cases. Additionally, supply chain security has emerged as a concern: ensuring that the chips and software powering AI are free from backdoors or vulnerabilities (which ties back to sovereignty as well). Security by design is a must. As one NEXTDC customer put it, their clients “rely on the ability to run AI-powered applications without interruption, for as long as they need”, so having a partner that can guarantee uptime and flexibility is crucial. In practice, hyperscalers are choosing colocation providers and designs that emphasise robust risk management, from certified physical security controls to comprehensive compliance with standards (ISO 27001, SOC 2, etc.). In the AI Factory age, a security breach or prolonged outage isn’t just an IT issue; it’s a business-critical incident. Therefore, security and resilience permeate every layer of the 5S model, underpinning speed, scale, sovereignty, and sustainability goals with a foundation of trust and reliability.

In summary, these 5S priorities are shaping decisions at the highest levels. Hyperscaler CIOs and CTOs are now asking: Can our infrastructure deploy new AI capacity fast enough (Speed)? Can it grow to the scale we’ll need next year and five years from now (Scale)? Do we have the right locations and partnerships to meet data jurisdiction and governance needs (Sovereignty)? Are we minimising our environmental impact and energy risk even as we expand (Sustainability)? And can we guarantee security and resilience end-to-end so that our AI services never falter (Security)? The AI Factory era compels a holistic approach – success will come from excelling across all five dimensions, rather than optimising for just one. In practice, this means designing data centre solutions that are agile and fast, massively scalable, locally available and compliant, green and efficient, and rock-solid secure. That’s a tall order – but it’s exactly what the leading innovators are now building.

NEXTDC: Your Strategic Partner for Next-Generation AI Infrastructure

As AI workloads push data centre requirements into a new era, NEXTDC isn't just keeping pace; we're redefining what's possible for hyperscalers in APAC. We deliver the foundational pillars you need most: unprecedented density, scalable capacity, ironclad security, and accelerated deployment velocity, all underpinned by sovereign-grade and genuinely sustainable infrastructure. We don't just provide space; we deliver the AI factories Jensen Huang envisions.

Engineered for AI: Factory-Ready, Certified & Deployed

Our infrastructure isn't merely "AI-compatible"; it's purpose-built for the extreme demands of AI.

- 600kW Per Rack Ready: We're designing for today's densest GPU workloads, with infrastructure engineered for next-generation AI clusters. This includes advanced direct-to-chip liquid cooling and sophisticated hybrid solutions, providing the power and thermal management vital for your most compute-intensive operations.

- NVIDIA DGX-Certified Partner: As an official DGX-Ready Data Centre partner, we ensure seamless and validated deployment of NVIDIA's highest-performance AI platforms. This partnership means your NVIDIA investments deliver maximum performance from day one.

National Dominance, Regional Reach

Our strategic footprint provides both the stability of a mature market and the critical connectivity for regional expansion.

- AI-Ready in Every Australian Capital City: Benefit from our 100% uptime SLA and Tier III+ and Tier IV certified data centres across all major Australian capitals: Sydney, Melbourne, Brisbane, Perth, Adelaide, Canberra, and Darwin. This national network offers unparalleled resilience and sovereign compliance for your core AI deployments.

- Asia Expansion: Our new builds in Tokyo, New Zealand and Kuala Lumpur strategically extend NEXTDC's platform across APAC. These locations are ideal for hyperscalers scaling GPU-as-a-Service, providing critical hubs for low-latency AI service delivery across the region.

Unrivalled Performance & Connectivity, Everywhere

Optimising AI performance demands a network without limits.

- Cloud-Neutral Ecosystem: Leverage our carrier-dense environment with interconnects up to 100Gbps and direct on-ramps to all major cloud platforms. This ensures your AI workloads benefit from ultimate flexibility and low-latency access to public cloud resources.

- Subsea Ready: Our direct access to international subsea cable landing stations guarantees ultra-low-latency AI performance across borders, essential for distributed AI training and global inference.

Trusted by AI Leaders, Recognised by the Industry

Our commitment to excellence in AI infrastructure is proven by those who rely on us and those who award us.

- Chosen by AI Pioneers: Leading innovators like SharonAI and other AI pioneers trust NEXTDC for their mission-critical AI operations, a testament to our reliability and advanced capabilities.

- Award-Winning Platform:

  - PTC 2025 Innovation Award – Best Data Centre Platform: Recognised for pushing the boundaries of infrastructure.

  - Frost & Sullivan – Recognised for Innovation and Customer Value: Affirming our market leadership and client-centric approach.

  - Uptime Institute – Tier IV Certified Across Multiple Cities: The highest standard in design and operational sustainability, ensuring maximum uptime for your most demanding AI workloads.

Our facilities offer the stability, sustainability, and strategic edge needed to build the future of AI. With NEXTDC, you don't just get data centres; you gain a purpose-built AI Factory ready for what's next.

Continue your journey: Read “The AI Power Surge: What Every CIO, CTO and Executive Needs to Know About Infrastructure Readiness”

Then, when you're ready to translate those insights into a tailored strategy for your next-generation AI platform, talk with one of our sales experts to map out your bespoke AI infrastructure roadmap and secure your competitive advantage.

Speak with an AI Factory Sales Specialist today.

Sources:

1. Caulfield, Brian. “NVIDIA CEO Envisions AI Infrastructure Industry Worth ‘Trillions of Dollars.’” The Official NVIDIA Blog, May 18, 2025. https://blogs.nvidia.com/blog/computex-2025-jensen-huang/.

2. NVIDIA. “What Is an AI Factory?” NVIDIA Glossary. Accessed June 9, 2025. https://www.nvidia.com/en-us/glossary/ai-factory/.

3. Confino, Paolo. “Jensen Huang Says All Companies Will Have a Secondary ‘AI Factory’ in the Future.” Fortune, April 30, 2025. https://fortune.com/article/jensen-huang-ai-manufacturing/.

4. Bolaji, Junko Yoshida. “COMPUTEX 2025: Nvidia CEO Huang Outlines Vision for AI Infrastructure.” Embedded.com, May 22, 2025. https://www.embedded.com/computex-2025-nvidia-ceo-huang-outlines-vision-for-ai-infrastructure/.

5. Williams, Wayne. “Megawatt-Class AI Server Racks May Well Become the Norm before 2030 as Nvidia Displays 600kW Kyber Rack Design.” TechRadar Pro, March 30, 2025. https://www.techradar.com/pro/megawatt-class-ai-server-racks-may-well-become-the-norm-before-2030-as-nvidia-displays-600kw-kyber-rack-design.

6. Vincent, Matt. “Inside NVIDIA’s Vision for AI Factories: Wade Vinson’s Data Center World 2025 Keynote.” Data Center Frontier, April 30, 2025. https://www.datacenterfrontier.com/machine-learning/article/55286658/inside-nvidias-vision-for-ai-factories-wade-vinsons-data-center-world-2025-keynote.

7. Data Center Frontier Staff. “CoolIT and Accelsius Push Data Center Liquid Cooling Limits Amid Soaring Rack Densities.” Data Center Frontier, April 11, 2025. https://www.datacenterfrontier.com/cooling/article/55281394/coolit-and-accelsius-push-data-center-liquid-cooling-limits-amid-soaring-rack-densities.
