The Race for AI Leadership: Rethinking Hyperscale Infrastructure in APAC

Across Asia-Pacific, hyperscalers are accelerating their AI infrastructure strategies: identifying new growth markets, evaluating critical infrastructure capabilities, and preparing to deploy next-generation workloads at unprecedented scale. This aggressive push is driven by the insatiable demand for cutting-edge AI services, from the computational intensity of generative AI and large language models (LLMs) to the rapid-fire requirements of real-time inference and complex scientific simulations. The region, with its vast and growing digital economies, diverse regulatory landscapes, and burgeoning talent pools, presents both immense opportunity and unique infrastructure challenges.

Informed by extensive industry conversations, powerful infrastructure demand signals, and recurring themes across the hyperscale ecosystem, we observe a distinct shift in priorities. These aren't just incremental adjustments; they represent fundamental re-evaluations of what constitutes an optimal data centre partner in the age of AI. The race to achieve AI dominance in this dynamic region means that traditional data centre metrics, once sufficient, now fall critically short.

Explore the key themes and strategic imperatives shaping the future of AI infrastructure in APAC:

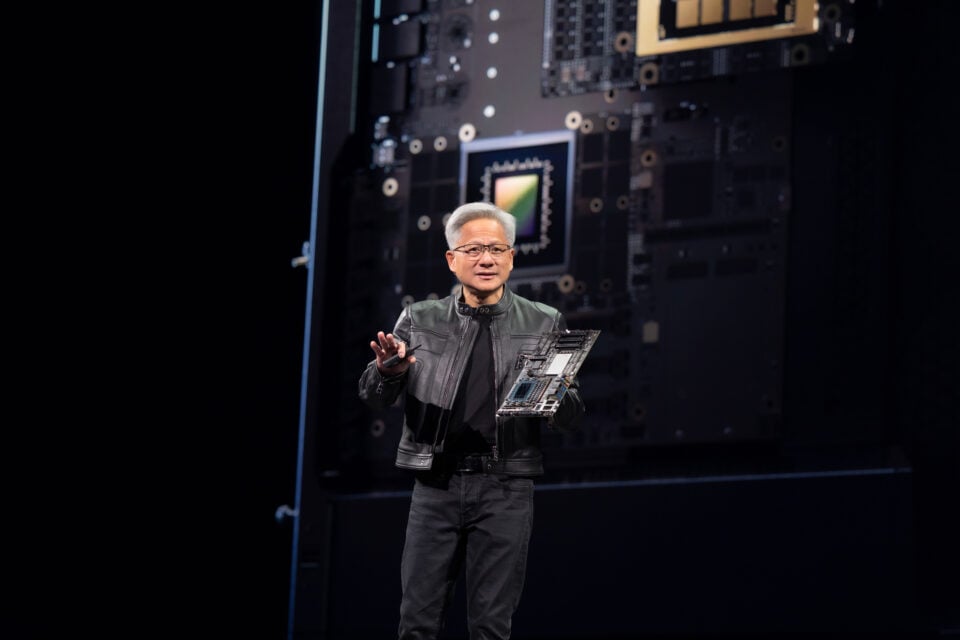

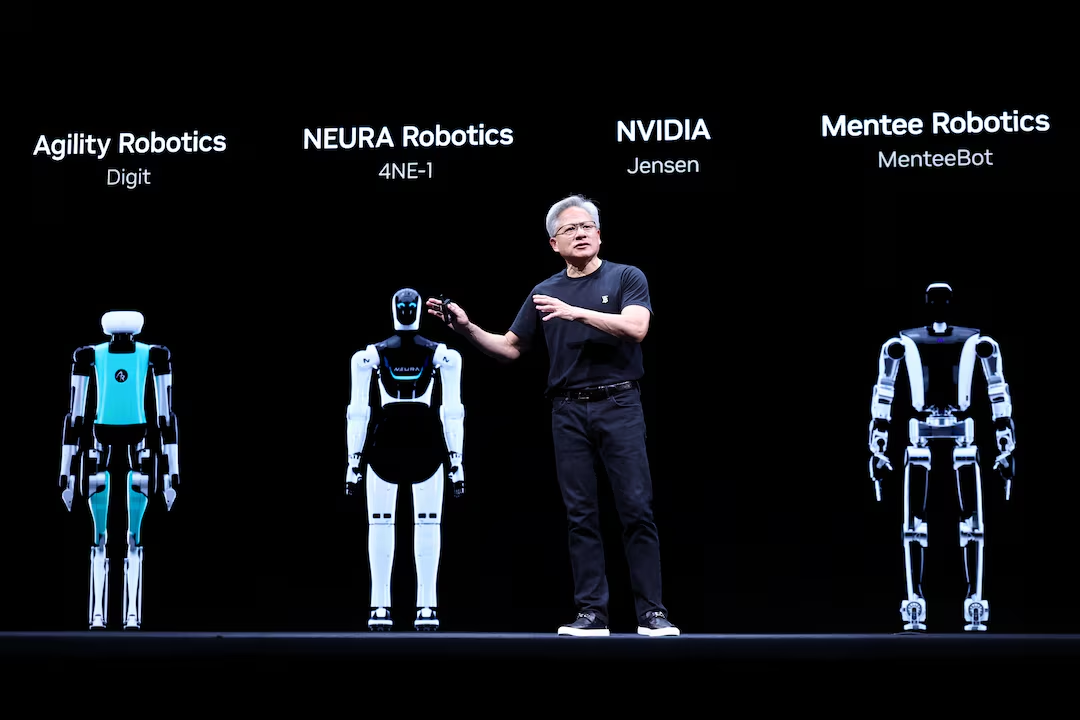

Jensen Huang's Vision: The Imperative for "AI Factories" in APAC

NVIDIA CEO Jensen Huang has been a leading voice in articulating the transformative shift in computing. His vision for the future of AI infrastructure resonates deeply with the strategic imperatives now facing hyperscalers in APAC. Huang maintains that AI isn't merely a new application; it's a new form of industrial infrastructure, akin to electricity or the internet, that demands "AI Factories." [1]

These "AI Factories," as Huang describes them, aren't the data centres of the past. They're specialised, purpose-built facilities designed to efficiently produce intelligence, consuming vast amounts of energy to generate "tokens." His core messages for organisations looking to scale AI directly inform the rigorous criteria hyperscalers are now applying to their APAC deployments [2]:

- Embrace "AI Factories" as the New Computing Infrastructure: Huang stresses that traditional data centres are fundamentally unsuited for the demands of modern AI. Hyperscalers must invest in infrastructure designed from the ground up for continuous, high-density AI production.

- Accelerate Everything with Full-Stack Computing: With "general purpose computing running out of steam," Huang advocates for accelerated computing, leveraging GPUs and specialised networking, coupled with a robust software stack, to achieve unprecedented speed and efficiency.

- Prioritise Efficiency and Sustainability: Huang highlights the need for solutions that drive down the "total cost of ownership" by maximising performance per watt and ensuring sustainable operations, even as compute consumption explodes. [3]

- Partner to Build, Don't Just Buy: Recognising the complexity, Huang emphasises that building these "AI Factories" often requires deep partnerships with providers who can deliver integrated, full-stack solutions.

- Strategic & Sovereign Deployment: Implicit in his discussions on national AI strategies, Huang underlines the need for secure, compliant, and geopolitically agile infrastructure.

These aren't just theoretical concepts; they are the bedrock upon which hyperscalers must build their competitive advantage in APAC.

AI at Scale: The 10 Critical Infrastructure Imperatives for Hyperscalers

Today, ten key priorities are acutely shaping hyperscalers' strategic direction in APAC, reflecting the complex and demanding nature of AI at scale:

1. Unprecedented Power & Density Requirements

The exponential growth of AI workloads, particularly for training, demands power densities far exceeding traditional data centre capabilities, often reaching hundreds of kilowatts per rack. Hyperscalers need facilities designed from the ground up to support this, with clear pathways to even higher future requirements like 1MW+ per rack. This isn't just about megawatts; it's about the very physics of power delivery, sophisticated in-rack power management, and redundant power distribution to ensure continuous operation. Without this foundational capability, hyperscalers face immediate caps on their AI investments, leading to underutilised hardware and missed market opportunities.
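To make the density point concrete, here is a minimal sketch of how rack count changes when the same IT power budget is delivered at different per-rack densities. The 10 MW hall budget and the three density figures are illustrative assumptions, not figures for any real facility, and the function name `racks_supported` is hypothetical.

```python
def racks_supported(it_power_budget_kw: float, rack_density_kw: float) -> int:
    """Return how many whole racks fit within an IT power budget.

    Ignores cooling overhead, redundancy, and floor space; this is
    back-of-envelope arithmetic only.
    """
    if rack_density_kw <= 0:
        raise ValueError("rack_density_kw must be positive")
    return int(it_power_budget_kw // rack_density_kw)


# Hypothetical data hall with a 10 MW IT power budget (assumption).
HALL_BUDGET_KW = 10_000

# Illustrative densities: a traditional rack, a "hundreds of kW" AI rack,
# and a future 1 MW rack. All three values are assumptions.
densities_kw = {"traditional": 10, "ai_today": 200, "ai_future": 1_000}

rack_counts = {
    label: racks_supported(HALL_BUDGET_KW, kw)
    for label, kw in densities_kw.items()
}

for label, count in rack_counts.items():
    print(f"{label}: {count} racks at {densities_kw[label]} kW each")
```

Under these assumptions the same power budget is concentrated into far fewer racks, which is why power delivery and in-rack power management, rather than floor area, become the limiting design factors.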

2. Advanced Thermal Management Solutions

Closely linked to power, the intense heat generated by AI compute (e.g., NVIDIA H100s, B200s, GB200 NVL72) makes conventional air cooling obsolete. Hyperscalers are urgently seeking data centres that have either implemented or can rapidly deploy advanced liquid cooling solutions, including direct-to-chip (D2C) and potentially immersion cooling, along with robust cooling redundancy (N+1/2N) to maintain stable operating temperatures and prevent throttling. For hyperscalers, inadequate cooling directly compromises AI chip performance, stability, and lifespan, translating into reduced compute efficiency and higher operational costs.

3. Ultra-Low Latency & Robust Interconnectivity

AI's real-time inference needs and the massive data transfers inherent in training require networks that are not only high-bandwidth but also exceptionally resilient and low-latency. Physical proximity to major internet exchange points (IXPs), leading cloud on-ramps, and diverse, redundant fibre routes becomes paramount. The internal data centre network must also be high-bandwidth (e.g., InfiniBand, 400GbE) to support rapid GPU-to-GPU communication within AI clusters. Network bottlenecks or high latency will cripple AI application performance for hyperscalers, hinder data-intensive training, and undermine the user experience, jeopardising competitive advantage.

4. Rapid Deployment Velocity & Scalability

The AI landscape is evolving at breakneck speed. Hyperscalers need to deploy new infrastructure, scale existing clusters, and iterate on models faster than ever to maintain a competitive edge. Prolonged build times or slow provisioning cycles can equate to significant lost market opportunity. They require partners who can deliver ready-to-deploy, AI-optimised infrastructure swiftly, whether through build-to-suit engagements, rapidly provisioned modular solutions, or phased expansions, all backed by stringent Service Level Agreements (SLAs). This includes efficient logistics and secure staging for large-scale equipment deliveries. Speed to market directly translates to market share in AI; a slow or inflexible data centre partner will severely impede a hyperscaler's strategic expansion.

5. Verifiable Sustainability & Energy Efficiency

With increasing scrutiny on energy consumption and environmental impact, hyperscalers are prioritising data centres that demonstrate a clear commitment to renewable energy sources, industry-leading energy efficiency (low PUE), and effective water management. This includes direct procurement of green power, participation in verifiable renewable energy programmes, and a clear roadmap to net-zero operations, aligning with global climate goals. For hyperscalers, a focus on sustainability is no longer just PR; it's critical for meeting corporate ESG goals, reducing long-term operating costs, and maintaining a social licence to operate in key markets.
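For readers less familiar with PUE (Power Usage Effectiveness), it is the ratio of total facility energy to the energy consumed by IT equipment alone, so a value closer to 1.0 means less overhead. The short sketch below shows the arithmetic; the energy figures are hypothetical and the function name `pue` is ours, not part of any standard tool.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical metered energy over the same period (assumed values).
total_kwh = 1_300.0  # everything the facility drew: IT, cooling, losses
it_kwh = 1_000.0     # energy delivered to IT equipment only

facility_pue = pue(total_kwh, it_kwh)

# Overhead is the share of extra energy spent per unit of IT energy.
overhead_fraction = facility_pue - 1.0

print(f"PUE: {facility_pue:.2f}")
print(f"Non-IT overhead per kWh of IT load: {overhead_fraction:.2f} kWh")
```

In this assumed case every kilowatt-hour of compute carries roughly 0.3 kWh of cooling and distribution overhead, which is the quantity a lower PUE reduces.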

6. Stringent Sovereignty & Regulatory Compliance

Geopolitical considerations and evolving data privacy laws (e.g., GDPR-style regimes in various APAC nations) make data residency and regulatory compliance non-negotiable. Hyperscalers demand data centres that not only comply with local laws but also offer granular controls and verifiable assurances regarding data residency, access, and security, ensuring sensitive AI models and training data remain within specified geographic or jurisdictional boundaries. Non-compliance with data sovereignty and regulatory requirements can result in severe legal penalties for hyperscalers, reputational damage, and loss of critical market access.

7. Comprehensive Physical & Cyber Security

The immense value of AI intellectual property and sensitive data necessitates robust, multi-layered security. This includes stringent physical security measures like multi-factor authentication, biometric access controls, 24/7 surveillance, and highly trained on-site personnel. Equally vital are the data centre's internal cybersecurity posture, network segmentation policies, DDoS protection, and adherence to leading security certifications (e.g., ISO 27001). Protecting proprietary AI models and sensitive data from breaches, theft, or sabotage is paramount for hyperscalers to maintain competitive advantage and customer trust.

8. Vibrant Ecosystem Access & Partnerships

Seamless integration with the broader digital ecosystem is crucial. Hyperscalers look for data centres offering direct connectivity and peering with major cloud providers, telecommunications carriers, and other strategic partners. Equally important is access to subsea cable landing stations, which provide ultra-low-latency routes to international markets, global redundancy, and high-bandwidth capacity for data-intensive AI workloads. This level of interconnection ensures that hyperscalers can operate globally distributed models, collaborate across regions, and deliver seamless AI services at scale. A well-connected ecosystem, both locally and globally, amplifies a hyperscaler's reach, facilitates innovation partnerships, and accelerates the deployment of next-generation AI platforms.

9. High Operational Maturity & Support

For mission-critical AI operations, consistent reliability and expert support are paramount. This involves 24/7 engineering support with staff highly trained in managing hyperscale environments and advanced AI cooling systems. Sophisticated automation, real-time monitoring tools, and predictive analytics are essential for ensuring proactive operational excellence and minimising downtime. Furthermore, the facility must ensure its physical structure, including floor loading capacity, can support the extreme weight of fully loaded AI racks. Operational excellence directly translates to maximum uptime and efficiency for a hyperscaler's valuable AI hardware, safeguarding against costly disruptions and ensuring peak performance.

10. Specialised AI Workload Optimisation & Certification

Beyond general capabilities, data centres must demonstrate both an understanding of AI workloads and an architecture designed specifically for them. This includes being 'AI-ready' or 'DGX-Ready Certified' to meet NVIDIA's stringent requirements, with infrastructure explicitly optimised for intensive AI training workloads (ultra-high-density, high-speed interconnects) and low-latency AI inference at scale. The ultimate goal is to function as an "AI Factory," enabling the full AI lifecycle from model development to deployment. Partnering with an AI-specialised data centre ensures hyperscalers' infrastructure is purpose-built to unleash the full potential of their AI investments, driving faster innovation and operational superiority.

Your Strategic Partner in AI Scale

The path to successfully deploying and scaling AI infrastructure is fraught with unique challenges, but also immense opportunities. For hyperscalers, ignoring any of these ten imperatives risks undermining their significant AI investments and ceding competitive advantage. As the demands of AI continue to evolve, selecting a data centre partner capable of delivering on all these fronts is no longer a luxury, but a strategic necessity. It's about securing a foundation that not only meets today's extreme requirements but is inherently engineered for the AI breakthroughs of tomorrow.

Ready to Transform Your AI Infrastructure?

At NEXTDC, we're not just building data centres; we're architecting the AI Factories of the future. Our proven expertise in deploying infrastructure aligned to NVIDIA and partner specifications, combined with our national footprint, robust sovereign controls, and unparalleled performance-at-scale capabilities, makes us the ideal partner for your most demanding AI deployments.

Don't let infrastructure be a bottleneck to your AI ambitions.

Connect with the NEXTDC sales team to schedule a tailored strategy session.

OR

Download our AI Data Centre Evaluation Scorecard to benchmark your current and future sites against the needs of next-generation AI.

Sources:

1. Katz, Darren. “The Intelligence Infrastructure Revolution: Inside Jensen Huang’s Vision for AI and Nvidia.” Tamim Asset Management. Last modified June 5, 2025. https://tamim.com.au/market-insight/ai-industrial-revolution-nvidia-jensen-huang/.

2. Emilio, Maurizio Di Paolo. “Computex 2025: NVIDIA CEO Huang Outlines Vision for AI Infrastructure.” Embedded.com, May 19, 2025. https://www.embedded.com/computex-2025-nvidia-ceo-huang-outlines-vision-for-ai-infrastructure/.

3. Mackereth, Oscar. “Accounting for AI: Financial Accounting Issues and Capital Deployment in the Hyperscaler Landscape.” Cerno Capital, April 10, 2025. https://cernocapital.com/accounting-for-ai-financial-accounting-issues-and-capital-deployment-in-the-hyperscaler-landscape.
